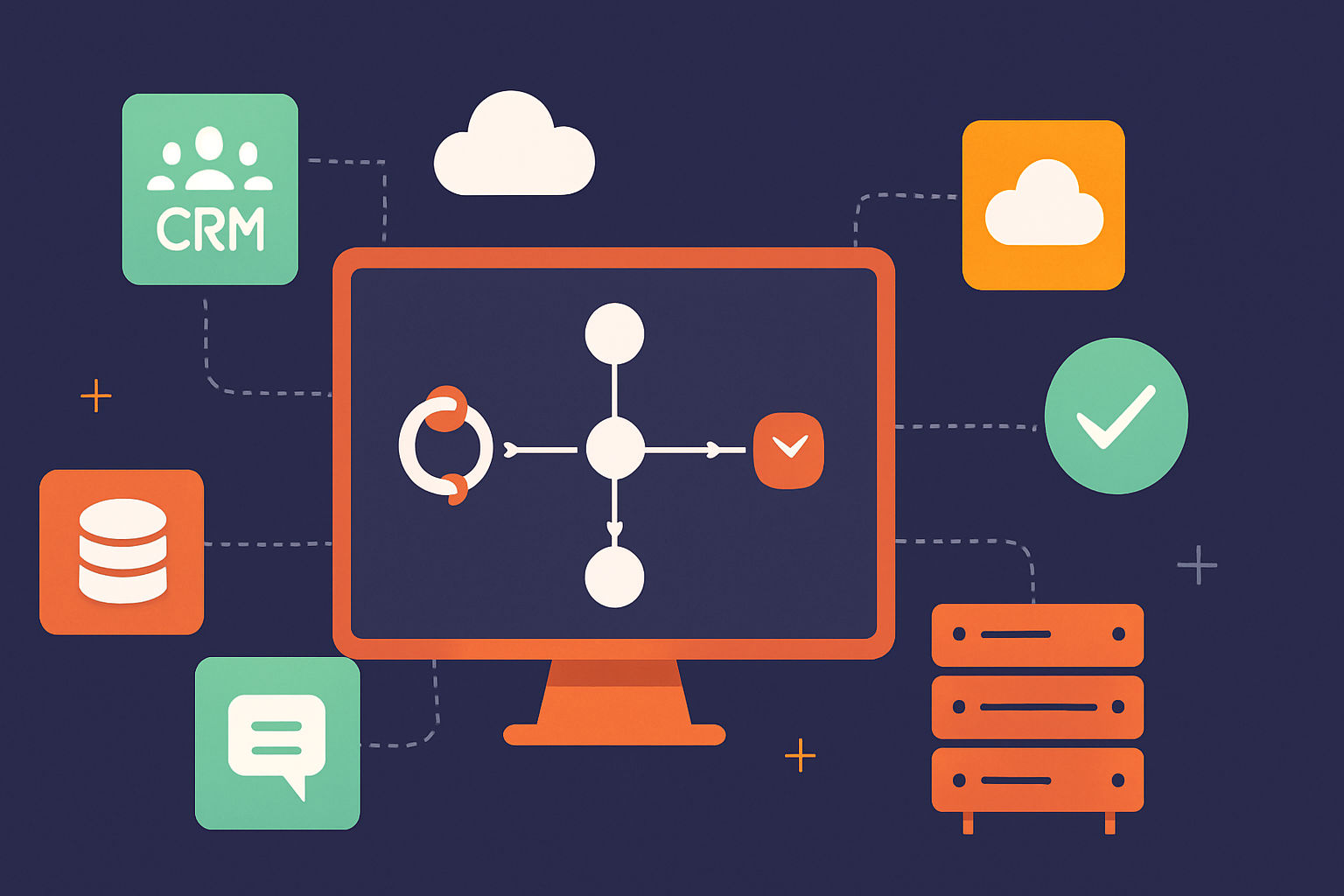

Automation in ERP should be visible, controllable, and governed. Too often, large backend jobs are tucked away in scripts and scheduled tasks that only developers or a few administrators understand. When complex work is surfaced as a simple, auditable record, organizations get safety, clarity, and broader ownership. This piece describes a pattern I use in NetSuite: modular, interface-driven automation where intent is a record, not a reason.

From scripts to records: shifting the surface of intent

Historically, ERP automation has lived in scripts, scheduled jobs, and configuration files. Those artifacts are powerful but opaque to the business owners who must trust their outcomes. Interface-driven automation moves the expression of intent into a record: a first-class object in the system that users can create, review, clone, and approve.

This is not about hiding complexity. Developers still build robust services and remediation routines. The change is where complexity is expressed: behind a human-friendly surface that shows scope, filters, and expected actions.

What records buy you: safety and control

Modeling automation as records unlocks safety patterns that align with governance and audit expectations:

- Dry runs: A simulation shows what would change without committing.

- Logs and audit trails: Each job records who requested it, what filters were used, and the detailed outcome.

- Approval gates: Workflows can require explicit signoffs before execution.

- Reproducibility: Jobs can be cloned and re-run with the same inputs, with an audit trail attached to each run.

These capabilities turn guesswork into a repeatable, traceable process. An analyst can validate intent, run a preview, obtain approval, and then execute a single auditable unit of work.
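The validate, preview, approve, execute sequence can be enforced as a small state machine on the job record, so execution is simply unreachable without a preview and an approval. The TypeScript below is a minimal sketch, not a NetSuite API: the `JobStatus` values, the `transition` function, the in-memory `AuditEntry` log, and the rule that a requester cannot approve their own job are all hypothetical choices for illustration. A SuiteScript implementation would persist the status and log on a custom record instead.

```typescript
// Hypothetical lifecycle states for a job record (illustrative, not a NetSuite API).
type JobStatus = "Draft" | "Previewed" | "PendingApproval" | "Approved" | "Rejected" | "Executed";

// Allowed transitions: "Executed" is only reachable through preview and approval.
const ALLOWED: Record<JobStatus, JobStatus[]> = {
  Draft: ["Previewed"],
  Previewed: ["Previewed", "PendingApproval"], // a preview may be re-run
  PendingApproval: ["Approved", "Rejected"],
  Approved: ["Executed"],
  Rejected: ["Draft"], // adjust filters and start over
  Executed: [], // terminal: clone the job to run it again
};

interface AuditEntry {
  from: JobStatus;
  to: JobStatus;
  actor: string;
  at: Date;
}

interface Job {
  id: string;
  status: JobStatus;
  requestedBy: string;
  audit: AuditEntry[];
}

// Returns a copy of the job in the new status, refusing illegal jumps and logging every change.
function transition(job: Job, to: JobStatus, actor: string, at: Date = new Date()): Job {
  if (ALLOWED[job.status].indexOf(to) === -1) {
    throw new Error(`Illegal transition ${job.status} -> ${to} on job ${job.id}`);
  }
  // Hypothetical segregation-of-duties rule: the requester cannot approve their own job.
  if (to === "Approved" && actor === job.requestedBy) {
    throw new Error(`Requester ${actor} cannot approve job ${job.id}`);
  }
  return { ...job, status: to, audit: [...job.audit, { from: job.status, to, actor, at }] };
}

// Usage: one job walked through the full lifecycle by different people.
let job: Job = { id: "CJ-1", status: "Draft", requestedBy: "analyst", audit: [] };
job = transition(job, "Previewed", "analyst");
job = transition(job, "PendingApproval", "analyst");
job = transition(job, "Approved", "controller");
job = transition(job, "Executed", "scheduler");
```

Returning a new object rather than mutating keeps each step's audit entry tied to exactly one transition, which mirrors the "single auditable unit of work" idea.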

Empowering administrators and analysts

When automation is exposed as records, the people closest to the business become the agents of change rather than perpetual requesters of developer time. That matters in three ways:

- Faster iteration: Admins can tweak filters, run previews, and iterate without code deployments.

- Shared accountability: Approvals and comments live on the job record, so responsibility is visible and trackable.

- Reduced developer load: Developers focus on building safe, well-tested services and APIs; admins consume them through predictable interfaces.

Conceptual example: the Cleanup Job

Imagine a Cleanup Job record in NetSuite. A typical lifecycle looks like this:

- Create: an analyst creates a Cleanup Job and selects a record type (Customer, Item, Transaction) and a saved search or filter set.

- Preview (dry run): the job runs in preview mode and returns a summary and a detailed candidate list with reason codes.

- Review: stakeholders inspect the candidate list, add comments, and attach a signoff or trigger an approval workflow.

- Execute: after approval, the job is scheduled or executed immediately. Before changing anything, the process stores a pre-change snapshot of the affected records.

- Audit: the job record holds a post-run outcome log showing who ran it, when, which records were changed, and how.
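One way to keep the preview honest is to have the dry run and the real run share the same planning step, so the candidate list reviewers approved is exactly what gets applied. The sketch below assumes records are plain field maps and implements only a "Remove Value" action; `planChanges`, `runJob`, the `OBSOLETE_VALUE` reason code, and the snapshot shape are hypothetical names, not NetSuite APIs.

```typescript
// A record as a plain field map; a stand-in for a NetSuite record (hypothetical).
type Fields = Record<string, string>;

interface Rec {
  id: string;
  fields: Fields;
}

interface Candidate {
  id: string;
  field: string;
  before: string;
  after: string;
  reason: string; // reason code shown to reviewers
}

interface RunResult {
  dryRun: boolean;
  candidateCount: number;
  candidates: Candidate[];
  snapshot: Record<string, Fields>; // pre-change copies; empty on a dry run
}

// Plans a "Remove Value" action: clear `field` wherever it holds an obsolete value.
function planChanges(records: Rec[], field: string, obsolete: string[]): Candidate[] {
  const out: Candidate[] = [];
  for (const r of records) {
    const value = r.fields[field];
    if (value !== undefined && obsolete.indexOf(value) !== -1) {
      out.push({ id: r.id, field, before: value, after: "", reason: "OBSOLETE_VALUE" });
    }
  }
  return out;
}

// Preview and execution share the planning step; only execution mutates records.
function runJob(records: Rec[], field: string, obsolete: string[], dryRun: boolean): RunResult {
  const candidates = planChanges(records, field, obsolete);
  const snapshot: Record<string, Fields> = {};
  if (!dryRun) {
    for (const c of candidates) {
      const rec = records.filter((r) => r.id === c.id)[0];
      snapshot[c.id] = { ...rec.fields }; // capture state before any change
      rec.fields = { ...rec.fields, [c.field]: c.after };
    }
  }
  return { dryRun, candidateCount: candidates.length, candidates, snapshot };
}

// Usage: preview first, then execute the same job for real.
const customers: Rec[] = [
  { id: "101", fields: { category: "Legacy" } },
  { id: "102", fields: { category: "Retail" } },
];
const preview = runJob(customers, "category", ["Legacy"], true);
const result = runJob(customers, "category", ["Legacy"], false);
```

The snapshot is keyed by record ID, so a rollback routine (not shown) could restore each record from it.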

Field examples on the Cleanup Job record: recordType, savedSearchId, remediationAction (Set Field / Remove Value / Merge), dryRun (boolean), previewSummary, candidateCount, preChangeSnapshotId, approvalStatus, executedBy, executedAt.
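These fields map naturally onto a typed model. In NetSuite they would be custom fields on a custom record type; the TypeScript below only sketches the shape, plus a pre-execution check. The `ApprovalStatus` values, the `validateForExecution` helper, and the saved search ID are assumptions for illustration.

```typescript
// Shape of the Cleanup Job record, using the field names listed above.
type RemediationAction = "Set Field" | "Remove Value" | "Merge";
type ApprovalStatus = "Pending" | "Approved" | "Rejected"; // assumed values

interface CleanupJob {
  recordType: "Customer" | "Item" | "Transaction"; // the example types from the article
  savedSearchId: string;
  remediationAction: RemediationAction;
  dryRun: boolean;
  previewSummary?: string;
  candidateCount?: number; // set once a preview has run
  preChangeSnapshotId?: string;
  approvalStatus: ApprovalStatus;
  executedBy?: string;
  executedAt?: Date;
}

// Hypothetical guard: lists every reason the job may not run for real yet.
function validateForExecution(job: CleanupJob): string[] {
  const problems: string[] = [];
  if (!job.savedSearchId) problems.push("No saved search: scope is undefined.");
  if (job.candidateCount === undefined) problems.push("No preview has been run.");
  if (job.approvalStatus !== "Approved") problems.push("Job is not approved.");
  if (job.executedAt) problems.push("Job already executed; clone it to re-run.");
  return problems;
}

// Usage: a freshly created job is blocked until it is previewed and approved.
const draft: CleanupJob = {
  recordType: "Customer",
  savedSearchId: "customsearch_obsolete_values", // hypothetical ID
  remediationAction: "Remove Value",
  dryRun: true,
  approvalStatus: "Pending",
};
const problems = validateForExecution(draft);
```

Returning all problems at once, rather than failing on the first, lets the job record display a complete checklist to the analyst.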

This pattern keeps the heavy lifting in services while making intent, scope, and outcomes explicit and discoverable.

Design principles for safe, auditable automation

To be effective, record-driven automation should follow clear principles:

- Idempotency: Jobs should be repeatable without unintended side effects. Use safe update patterns and track change tokens or timestamps.

- Observability: Inputs, expected outputs, and final results should be human-readable and discoverable on the job record.

- Granularity: Prefer multiple smaller, auditable steps over single monolithic sweeps. Break work into chunks you can meaningfully review.

- Least privilege: Restrict who can create, approve, and execute jobs by mapping each action to a role. Narrow permissions reduce risk.

- Transparency: Keep approval history, comments, and logs attached to the job record for easy review.
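Idempotency and granularity can meet in the execute step. In this sketch each planned update carries the change token (here a last-modified number) captured at preview time. An update is skipped if the record already has the target value, refused if the record changed since the preview, and applied otherwise. Work runs in fixed-size chunks so each batch yields its own reviewable outcome list. `applyChunked`, the outcome codes, and the `lastModified` token are hypothetical; a real NetSuite job would also have to respect script governance limits.

```typescript
interface StoredRecord {
  id: string;
  value: string;
  lastModified: number; // change token, e.g. epoch millis
}

interface PlannedUpdate {
  id: string;
  target: string;
  tokenAtPreview: number; // lastModified seen when the preview ran
}

type Outcome = "UPDATED" | "ALREADY_DONE" | "STALE" | "MISSING";

// Applies updates in fixed-size chunks; calling it again with the same plan is safe.
function applyChunked(
  store: Record<string, StoredRecord>,
  plan: PlannedUpdate[],
  chunkSize: number,
  now: () => number,
): Outcome[][] {
  const chunks: Outcome[][] = [];
  for (let i = 0; i < plan.length; i += chunkSize) {
    const outcomes: Outcome[] = [];
    for (const u of plan.slice(i, i + chunkSize)) {
      const rec = store[u.id];
      if (!rec) {
        outcomes.push("MISSING");
      } else if (rec.value === u.target) {
        // Checked before the token so a re-run is a no-op, not a false "stale".
        outcomes.push("ALREADY_DONE");
      } else if (rec.lastModified !== u.tokenAtPreview) {
        outcomes.push("STALE"); // changed since preview: needs a fresh review
      } else {
        store[u.id] = { ...rec, value: u.target, lastModified: now() };
        outcomes.push("UPDATED");
      }
    }
    chunks.push(outcomes); // one reviewable, loggable unit per chunk
  }
  return chunks;
}

// Usage: record "b" was edited after the preview, so it is refused.
const store: Record<string, StoredRecord> = {
  a: { id: "a", value: "old", lastModified: 1 },
  b: { id: "b", value: "old", lastModified: 5 },
  c: { id: "c", value: "new", lastModified: 2 },
};
const plan: PlannedUpdate[] = [
  { id: "a", target: "new", tokenAtPreview: 1 },
  { id: "b", target: "new", tokenAtPreview: 4 },
  { id: "c", target: "new", tokenAtPreview: 2 },
];
let clock = 100;
const first = applyChunked(store, plan, 2, () => clock++);
const second = applyChunked(store, plan, 2, () => clock++);
```

Running the same plan twice changes nothing the second time, which is the practical test of idempotency.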

Beyond cleanup: where this pattern scales

Cleanup jobs are a concrete example, but the pattern applies broadly: reconciliations, archival tasks, bulk attribute updates, controlled imports, and staged data migrations all benefit from being modeled as records. Each job becomes a first-class artifact in change management — versioned, reviewable, and auditable.

Practical next steps

Start small and measure. A suggested path:

- Identify a low-risk, repetitive task (e.g., remove obsolete values, normalize a custom field).

- Model it as a job record with these core fields: scope (saved search), remediation action(s), dry-run flag, and a notes/approval section.

- Implement a preview mode that returns a candidate list with counts and sample records.

- Add a simple approval gate and post-run artifacts: pre-change snapshot and an outcome log.

- Measure impact: track cycle time, developer tickets avoided, and audit readiness improvements.
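Cycle time is the easiest of these measures to derive from data the job record already holds. A minimal sketch, assuming each job stores a creation timestamp next to `executedAt`; the `createdAt` field, the use of the median rather than the mean, and the sample dates are illustrative assumptions.

```typescript
interface JobTimes {
  createdAt: Date; // assumed field; not in the article's field list
  executedAt?: Date; // unset for jobs that never ran
}

const HOUR_MS = 60 * 60 * 1000;

// Median hours from creation to execution; jobs that never executed are ignored.
function medianCycleHours(jobs: JobTimes[]): number | undefined {
  const hours: number[] = [];
  for (const j of jobs) {
    if (j.executedAt) {
      hours.push((j.executedAt.getTime() - j.createdAt.getTime()) / HOUR_MS);
    }
  }
  if (hours.length === 0) return undefined;
  hours.sort((a, b) => a - b);
  const mid = Math.floor(hours.length / 2);
  return hours.length % 2 === 1 ? hours[mid] : (hours[mid - 1] + hours[mid]) / 2;
}

// Illustrative inputs only: 4h, 24h, 2h, and one job still awaiting execution.
const sample: JobTimes[] = [
  { createdAt: new Date("2024-01-01T09:00:00Z"), executedAt: new Date("2024-01-01T13:00:00Z") },
  { createdAt: new Date("2024-01-02T09:00:00Z"), executedAt: new Date("2024-01-03T09:00:00Z") },
  { createdAt: new Date("2024-01-04T09:00:00Z"), executedAt: new Date("2024-01-04T11:00:00Z") },
  { createdAt: new Date("2024-01-05T09:00:00Z") },
];
const median = medianCycleHours(sample);
```

The median resists a single long-stalled approval skewing the figure, which a mean would not.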

Expect a cultural shift: fewer hidden scripts, more shared review and ownership.

Closing reflection

Modular, interface-driven automation brings ERP work into the hands of people who understand the business. By translating technical operations into auditable records, organizations gain safety, governance, and clarity. It’s a practical design choice with outsized returns: predictable change, clearer accountability, and faster iteration. A record, not a reason.